Not surprisingly, F# is a useful tool for all sorts of random tasks, web scraping among them. Today I’ll take a quick side track and leverage F# to scrape some COVID-19 data from the web and create some charts.

Pennsylvania provides daily COVID-19 stats on its website. This is great, but there are charts I’d like to see that it doesn’t provide. That is easy enough to resolve by doing my own data collection and charting; enter web scraping. As anyone who has done it before knows, scraping data can be a messy and error-prone process. Luckily, FSharp.Data provides some useful HTML parsing capabilities that make this process easier. I would also be remiss if I didn’t mention that github.com/tomswartz07 inspired me to whip this little project together. He uses Python, but I borrowed his data identification method, with a few F#-style improvements. Ok, time to do some setup.

```shell
dotnet new console -lang F# -n Covid19
```

```fsharp
open System
```

As with most web scraping, this can be a touchy and/or dirty process. Luckily, in this particular case the data is relatively simple to parse. I just need to grab the table rows (`<tr>`) and transform each row’s set of `<td>` cells into an array of strings. After that I just need to filter down to the rows I want. Again, I luck out with some pretty easy demarcation of the desired rows. The page reports county-level detail, but I only feel like tracking totals for the state, so I’ll do some quick column-level summing. This reduces the target webpage to a single timestamped row of data.
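As a rough illustration of the row-extraction step, here is a sketch that runs FSharp.Data’s `HtmlDocument` parser over a small made-up table. It is not the post’s `getData`; the sample markup and the `rowsOf` name are hypothetical, and the `#r "nuget: ..."` line assumes the code runs as an F# script rather than inside the console project.

```fsharp
// F# script; pulls in the FSharp.Data package from NuGet
#r "nuget: FSharp.Data"

open FSharp.Data

/// Turn every <tr> in the document into an array of its trimmed <td> texts.
/// Header rows built only from <th> cells come back as empty arrays,
/// which a later filter step can drop.
let rowsOf (doc: HtmlDocument) : string[][] =
    doc.Descendants [ "tr" ]
    |> Seq.map (fun row ->
        row.Descendants [ "td" ]
        |> Seq.map (fun cell -> cell.InnerText().Trim())
        |> Seq.toArray)
    |> Seq.toArray

// Made-up markup standing in for the county table on the real page
let sample =
    """<table>
  <tr><th>County</th><th>Positive</th><th>Negative</th></tr>
  <tr><td>Adams</td><td>12</td><td>340</td></tr>
  <tr><td>Allegheny</td><td>801</td><td>9,120</td></tr>
</table>"""

let rows = HtmlDocument.Parse(sample) |> rowsOf
// rows = [| [||]; [| "Adams"; "12"; "340" |]; [| "Allegheny"; "801"; "9,120" |] |]
```

Keeping every cell as a string at this stage leaves number parsing (including thousands separators like `9,120`) to the filtering step.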

```fsharp
let getData () =
```
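The filtering and column-summing steps can be sketched in plain F# too. The filter rule below (a fixed cell count, with every cell after the county name being numeric) is an assumption, since the post doesn’t spell out how the desired rows are demarcated; `tryParseCount`, `isCountyRow`, and `stateTotals` are hypothetical names.

```fsharp
open System
open System.Globalization

/// Parse a count such as "9,120" (thousands separators allowed).
let tryParseCount (s: string) : int option =
    match Int32.TryParse(s, NumberStyles.AllowThousands, CultureInfo.InvariantCulture) with
    | true, n -> Some n
    | _ -> None

/// Hypothetical row filter: the expected number of cells, with every cell
/// after the first (the county name) holding a number.
let isCountyRow (width: int) (cells: string[]) : bool =
    cells.Length = width
    && cells.[1..] |> Array.forall (fun c -> (tryParseCount c).IsSome)

/// Sum each numeric column over the kept rows and put the date in front,
/// producing a single statewide row.
let stateTotals (date: DateTime) (width: int) (rows: string[][]) : string[] =
    let sums =
        rows
        |> Array.filter (isCountyRow width)
        |> Array.map (fun cells -> cells.[1..] |> Array.map (tryParseCount >> Option.get))
        |> Array.fold (Array.map2 (+)) (Array.zeroCreate (width - 1))
    Array.append [| date.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture) |] (Array.map string sums)

// Made-up rows in the shape produced by the extraction step
let totals =
    stateTotals (DateTime(2020, 4, 10)) 3
        [| [||]
           [| "Adams"; "12"; "340" |]
           [| "Allegheny"; "801"; "9,120" |] |]
// totals = [| "2020-04-10"; "813"; "9460" |]
```

Folding with `Array.map2 (+)` adds the rows element-wise, so each position in `sums` is one column’s statewide total.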

Once I have the data, I need to store it. Since this is meant to be lightweight, I’ll just write the data to a CSV file. I want the script to be able to run multiple times a day, but I don’t want duplicate entries for the same date. Using a Map keyed by timestamp is a simple solution, since adding an existing key replaces its entry. More robust or performance-sensitive solutions would call for at least something like SQLite, but a CSV is good enough for now.

```fsharp
let insertData dataFile =
```
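A minimal sketch of the Map-based de-duplication, assuming a plain CSV with no header row, no quoted fields, and a `yyyy-MM-dd` date in the first column. The `upsertRow` name is hypothetical and this is not the post’s `insertData`.

```fsharp
open System.IO

/// Add or replace one row in a plain CSV file (no header, no quoted fields),
/// keyed by its first column. Because Map.add overwrites an existing key,
/// running this several times on the same date keeps a single row for that date.
let upsertRow (dataFile: string) (row: string[]) : unit =
    let existing =
        if File.Exists dataFile then
            File.ReadAllLines dataFile
            |> Array.filter (fun line -> line.Trim() <> "")
            |> Array.map (fun line ->
                let cells = line.Split ','
                cells.[0], cells)
            |> Map.ofArray
        else
            Map.empty
    let lines =
        existing
        |> Map.add row.[0] row
        |> Map.toArray          // sorted by key; "yyyy-MM-dd" keys sort by date
        |> Array.map (fun (_, cells) -> String.concat "," cells)
    File.WriteAllLines(dataFile, lines)
```

Rewriting the whole file on every run is fine at one row per day; it is exactly the kind of cost that would eventually justify SQLite.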

Once the data is collected, I want to show some simple trends over time. Granted, this has nothing to do with web scraping, but once I have the data I need to show a chart or two. Again, F# offers a decent charting option for quick visualizations.

```fsharp
let chartData dataFile =
```
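The post doesn’t show how the series for the second chart (daily increase with a scaled cumulative overlay) are derived, so here is one hedged way to compute them. Scaling the cumulative curve so its peak matches the largest daily increase is an assumption, and the plotting call itself is left to whichever charting library is in use; `dailyIncrease` and `scaleTo` are hypothetical names.

```fsharp
/// Day-over-day change in a cumulative series. The first day has no
/// predecessor, so the result is one element shorter than the input.
let dailyIncrease (cumulative: int[]) : int[] =
    cumulative
    |> Array.pairwise
    |> Array.map (fun (previous, current) -> current - previous)

/// Scale a series so its largest value equals targetMax, letting a large
/// cumulative curve share an axis with the much smaller daily increases.
let scaleTo (targetMax: float) (values: int[]) : float[] =
    if Array.isEmpty values then
        [||]
    else
        let peak = values |> Array.max |> float
        if peak = 0.0 then Array.map float values
        else values |> Array.map (fun v -> float v * targetMax / peak)

// Made-up cumulative positives for four days
let cumulative = [| 10; 25; 45; 60 |]
let daily = dailyIncrease cumulative                    // [| 15; 20; 15 |]
// Drop day one so the overlay lines up with the daily increases
let overlay = scaleTo (float (Array.max daily)) cumulative.[1..]
```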

The final piece is providing some control over which functionality the program runs.
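One way to provide that control is to pattern match on the command-line arguments. This is a sketch only: the subcommands, the default file name `covid19.csv`, and the `Command`, `parseArgs`, and `run` names are all hypothetical, since the post doesn’t list the switches it accepts.

```fsharp
/// Hypothetical commands and default file name; the post doesn't list
/// the switches its program accepts.
type Command =
    | ScrapeOnly of dataFile: string
    | ChartOnly of dataFile: string
    | ScrapeAndChart of dataFile: string
    | ShowUsage

let defaultFile = "covid19.csv"

let parseArgs (argv: string[]) : Command =
    match argv with
    | [||] -> ScrapeAndChart defaultFile
    | [| "scrape" |] -> ScrapeOnly defaultFile
    | [| "chart" |] -> ChartOnly defaultFile
    | [| "scrape"; file |] -> ScrapeOnly file
    | [| "chart"; file |] -> ChartOnly file
    | _ -> ShowUsage

/// Dispatch to the supplied actions and return a process exit code.
let run (scrape: string -> unit) (chart: string -> unit) (argv: string[]) : int =
    match parseArgs argv with
    | ScrapeOnly file -> scrape file; 0
    | ChartOnly file -> chart file; 0
    | ScrapeAndChart file ->
        scrape file
        chart file
        0
    | ShowUsage ->
        eprintfn "usage: Covid19 [scrape|chart] [data.csv]"
        1
```

In the console project, an `[<EntryPoint>]` `main` could then be `run insertData chartData argv`, assuming both functions take the data file path and return `unit`. Passing the actions in keeps the argument handling testable without touching the network or the file system.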


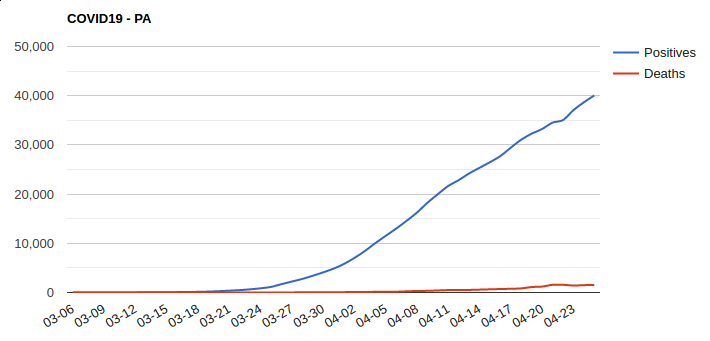

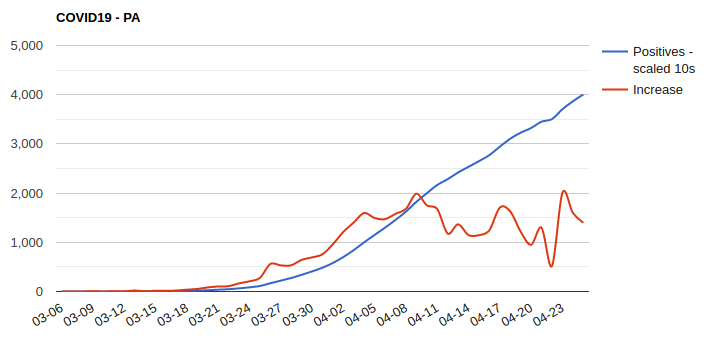

Above are the resulting charts. The first shows cumulative data over time. The second shows the daily increase in positive tests, with scaled cumulative positives overlaid. As the second chart shows, the daily increases level off at the beginning of April (relatively good news, and perhaps a good sign).

This has been a brief look at how F# can be used for some quick-and-dirty web scraping (and charting). It isn’t terribly sexy, but being able to hack quick things like this together with F# is a useful tool to have in the toolbox. Until next time.